Best practices for web workers in web apps

Web workers are a feature of modern web browsers that lets JavaScript run in the background, independently of the main browser thread. For details, see Using Web Workers.

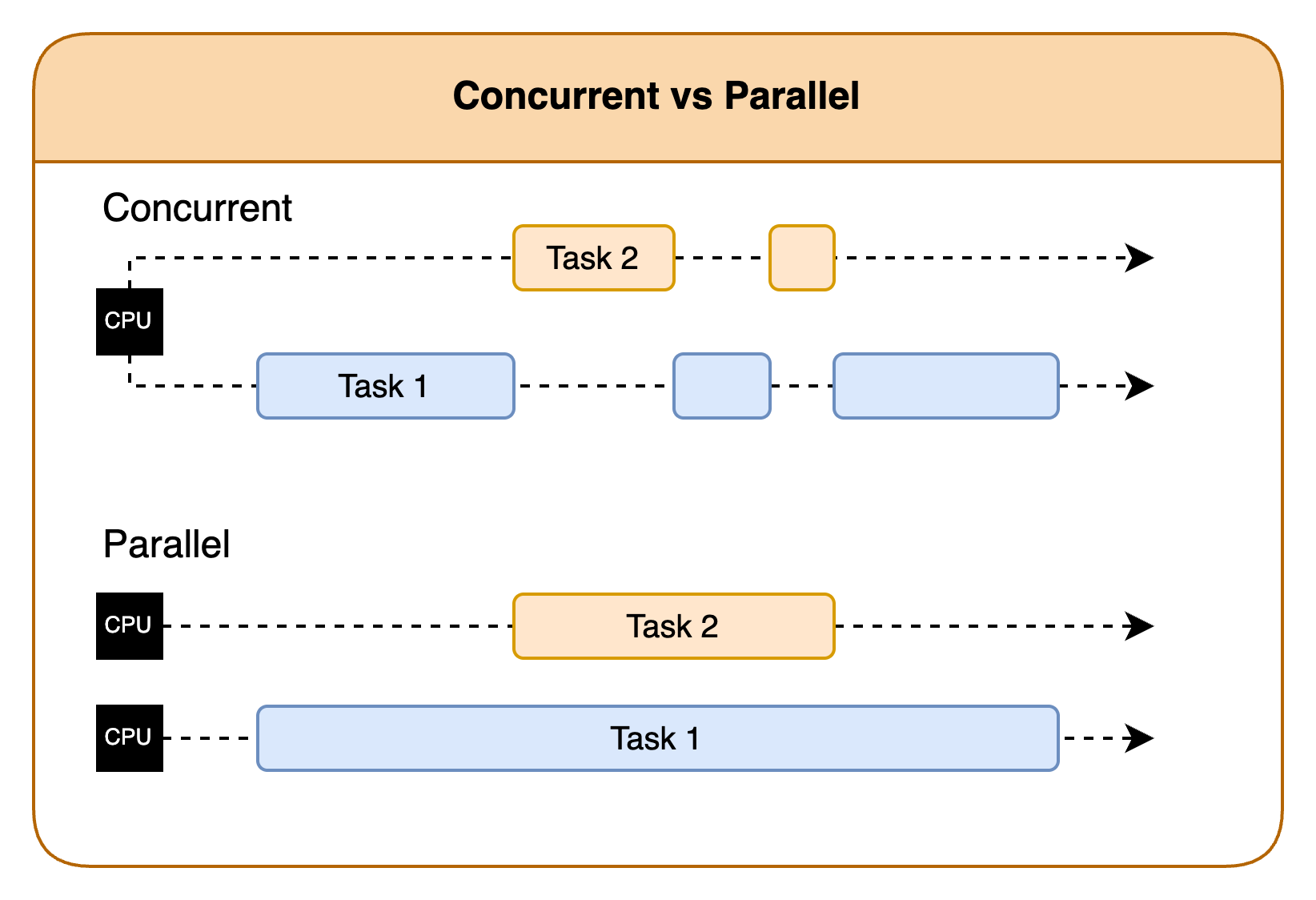

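This execution model is true parallelism, not the concurrency used to manage asynchronous operations on the main thread. With concurrent code, a computationally heavy asynchronous task can still block the UI. Web workers explicitly prevent UI blocking by offloading that work to a separate thread. Used appropriately, web workers are a powerful tool.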

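Blink is the rendering engine used by Chromium. It uses a thread pool algorithm designed to maximize web app performance. This algorithm is more complex than a fixed or cached thread pool and handles large numbers of invocations of small workers well. Blink balances parallelism and concurrency across CPU cores to make full use of them. In other words, Chromium manages threads and resources, including resource limits and sandboxing. Deciding how many web workers to create is the web app's responsibility: choose a number based on the type and complexity of the tasks and on the available system resources. The web app must also manage communication between the workers and the main thread. It should keep communication overhead low with strategies such as batching, prioritization, and limiting how many workers are dispatched at once.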
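Batching is one of the strategies listed above, and it can be sketched as follows. MessageBatcher, flush, and maxBatchSize are hypothetical names for this example, not part of any browser API. The idea is that many small results cross the thread boundary as one message instead of many.

```typescript
// Hypothetical helper (not a browser API): buffers small results and hands
// them to `flush` as one array, so the receiver gets one message per batch.
type FlushFn<T> = (batch: T[]) => void;

class MessageBatcher<T> {
  private buffer: T[] = [];
  private readonly flush: FlushFn<T>;
  private readonly maxBatchSize: number;

  constructor(flush: FlushFn<T>, maxBatchSize = 50) {
    this.flush = flush;
    this.maxBatchSize = maxBatchSize;
  }

  // Queue one result; send the batch as soon as it reaches maxBatchSize.
  add(item: T): void {
    this.buffer.push(item);
    if (this.buffer.length >= this.maxBatchSize) {
      this.drain();
    }
  }

  // Send whatever is queued (call this when a unit of work finishes).
  drain(): void {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.flush(batch);
  }
}
```

Inside a worker, the flush callback would typically be (batch) => self.postMessage(batch). Call drain() when a unit of work finishes so the last partial batch is not left behind. The default batch size of 50 is arbitrary. Larger batches mean fewer messages, but the first results arrive later.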

Web workers in Vega WebView

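Vega often runs on low-resource devices, so the number of threads should be limited according to the device's core count. JavaScript provides navigator.hardwareConcurrency to find out how many cores the device has. Minimum-spec devices have 4 cores, and higher-end devices have more. The formula used is number_of_workers = (2 * number_of_cores) + 1. For example, a device with 4 cores should run at most 9 workers. Depending on how the workers behave, the system can sometimes handle fewer or more than this. Understanding the web worker lifecycle explains some of these cases, and it also shows why oversubscribing resources is a problem.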
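The formula above can be computed directly from navigator.hardwareConcurrency. This is a minimal sketch. The fallback to 4 cores when the value is unavailable is an assumption based on the minimum-spec core count mentioned above. maxWorkers and detectCoreCount are illustrative names.

```typescript
// Assumption for this sketch: fall back to the 4 cores of a minimum-spec
// device when navigator.hardwareConcurrency is not available.
const MIN_SPEC_CORES = 4;

function detectCoreCount(): number {
  // Read navigator without depending on DOM typings, so this also runs outside a browser.
  const nav = (globalThis as unknown as { navigator?: { hardwareConcurrency?: number } }).navigator;
  const cores = nav?.hardwareConcurrency;
  return typeof cores === "number" && cores > 0 ? cores : MIN_SPEC_CORES;
}

// number_of_workers = (2 * number_of_cores) + 1
function maxWorkers(cores: number = detectCoreCount()): number {
  return 2 * cores + 1;
}

console.log(maxWorkers(4)); // 9
console.log(maxWorkers()); // varies with the current device
```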

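A web worker's typical lifecycle has five steps:

1. The main thread creates a new worker.
2. The main thread posts a message to it.
3. The worker processes the message.
4. The worker posts a new message back to the main thread.
5. The main thread processes the returned message.

Communication with the main thread affects its performance. If many small workers are created and finish at around the same time, and all of them send data back to the main thread for processing, the main thread suffers. This holds even when the responses are handled concurrently. The following strategies help get the best performance out of web workers.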
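The five steps above map onto code roughly as follows. The sketch assumes a simplified WorkerLike interface, a hypothetical stand-in for the parts of the browser Worker API it uses, so the flow can be followed and tested without a browser. It terminates the worker as soon as its single reply arrives.

```typescript
// Simplified stand-in for the parts of the browser Worker API used here.
// In a browser you would adapt `new Worker("worker.js")` to this shape.
interface WorkerLike {
  onmessage: ((event: { data: unknown }) => void) | null;
  postMessage(message: unknown): void;
  terminate(): void;
}

// Walks through one lifecycle: create, post, receive the reply, clean up.
function runTaskOnce<TIn, TOut>(createWorker: () => WorkerLike, input: TIn): Promise<TOut> {
  return new Promise<TOut>((resolve) => {
    const worker = createWorker(); // (1) the main thread creates a worker
    worker.onmessage = (event) => {
      // (4) the worker has posted its result back
      worker.terminate(); // release the worker as soon as it is done
      resolve(event.data as TOut); // (5) the main thread processes the reply
    };
    worker.postMessage(input); // (2) post the task; (3) runs on the worker thread
  });
}
```

A production version would also handle worker errors, for example through the worker's onerror handler. Without that, the promise never settles if the worker fails. For repeated tasks, reusing a worker avoids paying the creation cost each time.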

Web worker guidelines for Vega

- Use web workers only for tasks that genuinely benefit from them, and pay attention to which events trigger them. Too many web workers can overload low-resource devices such as the Fire TV Stick 4K Select.
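One way to keep an event from triggering more work than needed is to debounce it, so that a burst of events starts only one task. An example of such a burst is rapid focus changes while scrolling with a remote. Debouncing is a technique swapped in here, not one the guideline prescribes. The debounce helper and the wait times are illustrative assumptions.

```typescript
// Hypothetical helper: collapses a burst of calls into one call that runs
// after `waitMs` of quiet, using the arguments of the last call.
function debounce<A extends unknown[]>(fn: (...args: A) => void, waitMs: number): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    if (timer !== undefined) clearTimeout(timer); // drop the pending call
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };
}
```

For example, debounce((itemId: string) => worker.postMessage({ itemId }), 150) posts only the last focused item once navigation pauses for 150 ms. The message shape and the delay are hypothetical.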

- Reduce the amount of data passed between workers and the main thread.
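A simple way to reduce the data passed to a worker is to send only the fields it actually uses. The CatalogItem shape and the id/title selection below are hypothetical. What matters is the projection done before postMessage.

```typescript
// Hypothetical shape of a catalog item held on the main thread.
interface CatalogItem {
  id: string;
  title: string;
  description: string;
  artworkUrl: string;
  metadata: Record<string, string>;
}

// Assume the worker (say, a search indexer) only needs id and title.
type SearchIndexEntry = Pick<CatalogItem, "id" | "title">;

// Project each item down to the needed fields before posting it.
function toWorkerPayload(items: CatalogItem[]): SearchIndexEntry[] {
  return items.map(({ id, title }) => ({ id, title }));
}

// worker.postMessage(toWorkerPayload(items)); // instead of postMessage(items)
```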

- Use transferable objects to avoid copying large amounts of data.

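```javascript
// Use a transferable object to avoid copying a large amount of data.
const worker = new Worker('worker.js');
const data = new Uint8Array(1024 * 1024); // 1 MB of data
worker.postMessage(data, [data.buffer]); // Transfer the underlying ArrayBuffer
// After the transfer, data is detached on the main thread (byteLength is 0), so do not reuse it here.
```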
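You can observe the effect of a transfer without creating a worker. structuredClone accepts the same transfer list as postMessage and uses the same structured clone algorithm. This sketch assumes a runtime that supports structuredClone with the transfer option, which includes modern browsers and Node.js 17 or later.

```typescript
// Allocate the buffer explicitly so its type is ArrayBuffer (transferable).
const buffer = new ArrayBuffer(1024 * 1024); // 1 MB
const data = new Uint8Array(buffer);
data[0] = 42;

// Transfer ownership of the buffer instead of copying it.
const received = structuredClone(data, { transfer: [buffer] });

console.log(received.byteLength); // 1048576: the receiver now owns the memory
console.log(received[0]); // 42
console.log(data.byteLength); // 0: the sender's view is detached
```

Because the sender's view is detached, code on the main thread should not touch data after posting it with a transfer list.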


- Manage workers and know when they are no longer needed. Call terminate() on workers you no longer need so that finished workers do not pile up and burden the main thread.

```javascript
// Terminate a worker that is no longer needed.
worker.terminate();
```

- Where possible, reuse a worker or a worker pool instead of spawning new workers.

```javascript
const worker = new Worker('worker.js');
worker.postMessage('Instructions from the main thread');
// ... later ...
// Reuse the same worker by posting a new task to it.
worker.postMessage('New task');
```

- Use caching to reduce recomputation.

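```typescript
// CachedData is not defined in the original snippet; this shape is an assumption.
interface CachedData {
  data: unknown;
}

const cache = new Map<string, CachedData>(); // Use a Map for efficient ID-based lookups

self.onmessage = async (event: MessageEvent) => {
  const { type, payload } = event.data;
  const { id, forceRefresh } = payload;

  // Check whether the data is already in the cache.
  const cached = cache.get(id);
  if (cached && !forceRefresh) {
    self.postMessage({ type: 'DATA_RESPONSE', payload: cached.data });
    return;
  }
  // ...
};
```

- Know how many web workers can run well on a given number of cores. On a quad-core CPU, 1 to 9 simple workers run without problems. With 9 to 15 the UI is somewhat affected, and with 15 to 30 the effect is visible. Beyond that, problems occur.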

- You can use the following formulas for this calculation:

- Always safe: number_of_workers = navigator.hardwareConcurrency - 2

- Mostly safe: number_of_workers = (2 * navigator.hardwareConcurrency) + 1


- For long-lived or heavy workers, consider running only 2 to 4 at the same time.

- For CPU-heavy tasks, make sure that not too many potentially problematic workers run at the same time. Alternatively, schedule the work manually so the system is not overloaded.
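A small concurrency cap on the main thread can enforce the limits above. runWithLimit and safeWorkerBudget are hypothetical helpers. safeWorkerBudget applies the "always safe" formula but clamps the result to at least 1. That clamp is an assumption added here, because the raw formula gives 0 on a 2-core device.

```typescript
// Runs async tasks with at most `limit` in flight; results keep input order.
async function runWithLimit<T>(tasks: Array<() => Promise<T>>, limit: number): Promise<T[]> {
  const results: T[] = new Array<T>(tasks.length);
  let next = 0;

  // Each lane repeatedly claims the next unstarted task until none remain.
  async function lane(): Promise<void> {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const laneCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}

// "Always safe" budget (hardwareConcurrency - 2), clamped so it never drops below 1.
function safeWorkerBudget(cores: number): number {
  return Math.max(1, cores - 2);
}
```

Each task could post a job to a reused worker and resolve when that worker replies. For long-lived or heavy workers, a limit of 2 to 4 matches the guideline above. One way to get it is Math.min(4, safeWorkerBudget(navigator.hardwareConcurrency)).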


- Set caching headers so the same files are not downloaded again. Some libraries use workers to cache images and other content, and many of these libraries rely on predefined caching strategies.

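- TV apps often trigger a worker during navigation when an image is not yet present, so the content can be preloaded for smooth movement. If those images are not cached, rapid navigation makes the system create large numbers of workers, which tends to cause stutters and freezes. Make sure the headers are set correctly so that images are cached properly.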
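To illustrate caching headers for images, here is a sketch of a header policy a server or CDN origin might apply. It assumes image URLs are versioned, for example with content-hashed file names. That versioning is what makes a one-year max-age with immutable safe. The function name, the extension list, and the values are assumptions to adapt, not Vega requirements.

```typescript
// Hypothetical policy: long-lived caching for versioned image URLs only.
// Other responses get no extra headers and keep whatever policy they already have.
function imageCacheHeaders(path: string): Record<string, string> {
  const isImage = /\.(png|jpe?g|webp|gif|avif)$/i.test(path);
  if (!isImage) {
    return {};
  }
  // One year; "immutable" tells the client not to revalidate while fresh.
  return { "Cache-Control": "public, max-age=31536000, immutable" };
}
```

Without versioned URLs, a shorter max-age avoids serving outdated images for too long. It can optionally be combined with the stale-while-revalidate directive.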

- There are several kinds of caching strategies. Many libraries use one of "cache only," "cache first," "network only," "network first," or "stale-while-revalidate," along with other options such as expiration.
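To make the stale-while-revalidate strategy concrete, here is a minimal in-memory version. It is a sketch under stated assumptions, not the API of any caching library. SwrCache, maxAgeMs, and the injectable now clock are hypothetical names. It also shares one in-flight fetch per key, which helps in the rapid-navigation case described above, where the same image can be requested several times in a row.

```typescript
// Minimal in-memory stale-while-revalidate cache (a sketch, not a library API).
// Fresh entries are returned directly; stale entries are returned immediately
// while a background refresh runs; concurrent fetches for one key are shared.
class SwrCache<T> {
  private readonly entries = new Map<string, { value: T; fetchedAt: number }>();
  private readonly inflight = new Map<string, Promise<T>>();
  private readonly fetcher: (key: string) => Promise<T>;
  private readonly maxAgeMs: number;
  private readonly now: () => number;

  constructor(fetcher: (key: string) => Promise<T>, maxAgeMs: number, now: () => number = Date.now) {
    this.fetcher = fetcher;
    this.maxAgeMs = maxAgeMs;
    this.now = now;
  }

  async get(key: string): Promise<T> {
    const entry = this.entries.get(key);
    if (!entry) {
      return this.refresh(key); // cold miss: wait for the fetch
    }
    if (this.now() - entry.fetchedAt > this.maxAgeMs) {
      // Stale: serve the old value now and revalidate in the background.
      this.refresh(key).catch(() => {
        /* keep serving the stale value if the refresh fails */
      });
    }
    return entry.value;
  }

  private refresh(key: string): Promise<T> {
    const pending = this.inflight.get(key);
    if (pending) return pending; // reuse a fetch that is already running
    const request = this.fetcher(key)
      .then((value) => {
        this.entries.set(key, { value, fetchedAt: this.now() });
        return value;
      })
      .finally(() => {
        this.inflight.delete(key);
      });
    this.inflight.set(key, request);
    return request;
  }
}
```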


Last updated: January 14, 2026